In spoken language, these expressions also play an important role, but they function differently.

Problem #3: Second-language learners of ASL (the third most studied "foreign" language, with more than 107,000 US college enrollments as of 2016) have difficulty learning to produce these essential expressions, in part because they cannot see their own faces while signing.

To address these problems, we are creating AI tools (1) to enable ASL signers to share videos anonymously by disguising their faces without loss of linguistic information; (2) to help ASL learners produce these expressions correctly; and (3) to help speech scientists study co-speech gestures.

We have developed proof-of-concept prototypes for these three applications through NSF Phase I funding (grant #2040638).

These applications are built on new AI methods for continuous, multi-frame video analysis that ensure real-time, robust, and fair algorithm performance. User studies also guide the design of each application.

1) The Privacy Tool will allow signers to anonymize their own videos, replacing their faces while retaining all of the essential linguistic information.

2) The Educational Application will help learners produce nonmanual expressions and assess their progress by enabling them to record themselves signing along with target videos that incorporate grammatical markings. Feedback will be generated automatically from a computational analysis comparing each student's production with the target.

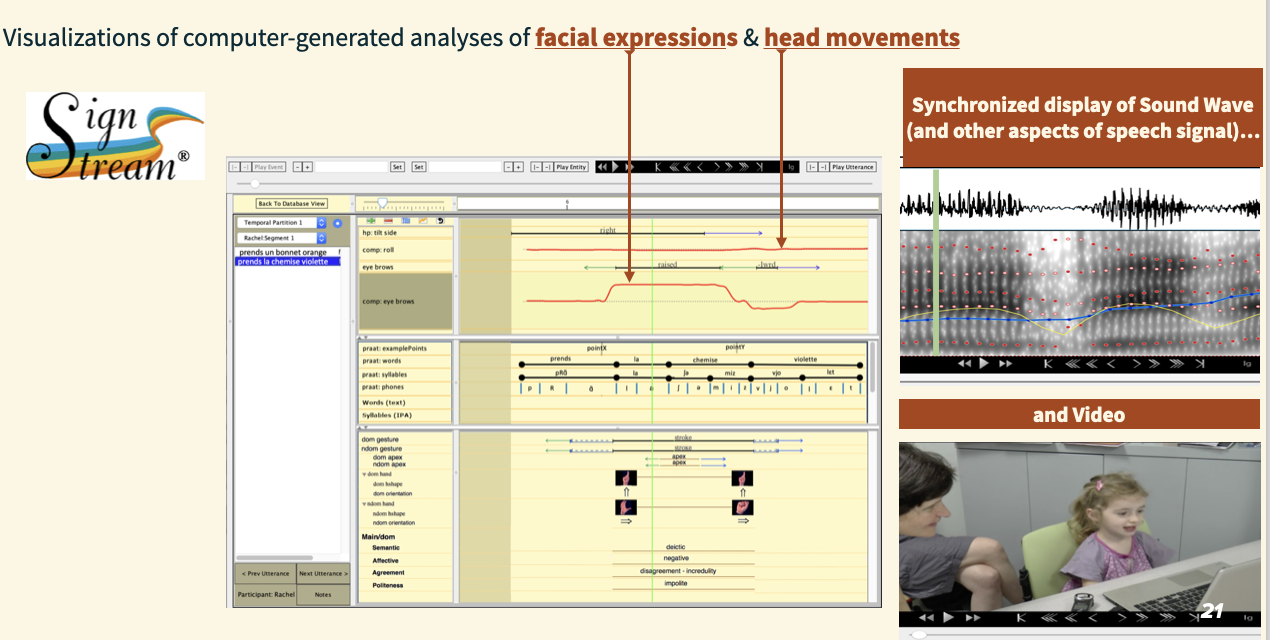

3) The Research Toolkit includes: (a) a Web-based tool that provides computer-based 3D analyses of nonmanual expressions in videos uploaded by the user; and (b) extensions to SignStream® (our tool for semi-automated annotation of ASL videos, which incorporates computer-generated analyses of nonmanual gestures) so that it can accommodate speech, together with extensions to our Web platform for sharing files in the new format. These software and data resources will facilitate research in many areas, including sign language linguistics and the study of co-speech gesture, thereby advancing the field in ways that will have important societal and scientific impact.

Differentiators

(1) The proposed AI approach to the analysis of facial expressions and head gestures, which combines 3D modeling, machine learning, and linguistic knowledge derived from our annotated video corpora, overcomes limitations of prior research. It is distinctive in its ability to capture subtle facial expressions, even with significant head rotations, occlusions, and blurring. The approach will also have applications beyond ASL, e.g., sanitizing other video data involving human faces, as well as medicine, security, driving safety, and the arts.

(2) The applications themselves are distinctive: nothing like our proposed deliverables exists.