Industry Connections: 3 Questions with Jeremy Doig

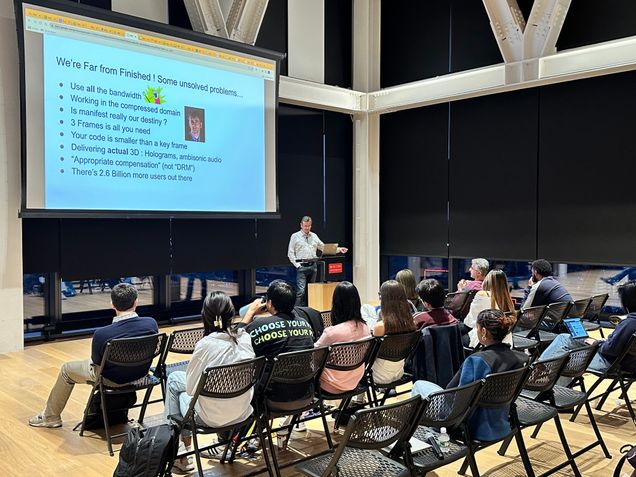

Jeremy Doig hosted an Industry Connections presentation, "The Evolution of Media Technology for the Online World," in November 2023.

Jeremy Doig remembers the moment his interest in computing and technology took flight: he was 12 years old, tinkering with a personal computer at a friend's house. His curiosity led him from writing programs in a notebook to pursuing a formal education and, later, to prominent positions at the BBC, Apple, Microsoft, Google, and Disney. Throughout his career, Doig has been a trailblazer in establishing fresh benchmarks for online media, including innovative techniques for audio and video compression, real-time and on-demand streaming protocols, and immersive spatial experiences.

When reflecting on his lifetime passion and professional career, Doig said he feels fortunate to work in a field he loves. "I'm so fortunate to be in a position where for most of my working life, I've always wanted to go to work," he said. "I love this stuff. It's never really been a job for me - it's a hobby I get paid for."

Doig will be sharing his experiences, expertise, and job market insights as a CDS Industry Connections speaker on November 7. His talk, "The Evolution of Media Technology for the Online World," will highlight the origins of and motivation for Google's significant investment in free, open-source media technologies and how the industry needed to change. He will also cover new areas of development and opportunities for engineers entering the field.

In this "3 Industry Questions" feature, Doig discusses his childhood influences, highlights his job market observations, and offers advice to students entering the field.

What inspired you to enter the field of computing and technology?

When I was 12, I used a ZX80 personal computer at a friend's house (in their living room on the carpet, in front of their TV). It changed my life. It was affordable (I calculated how many cars I needed to wash to pay for it, and how many weeks that would take to save up). It was here that I figured out, just from screenshots of the keyboard, how the BASIC programming language worked.

By the time I had saved enough, I could spring for the ZX81, and I went from BASIC to Z80 assembler pretty quickly. While I was waiting (many months) for the computer to be delivered, I wrote programs (mostly games) in a notebook, working through them and looking for bugs.

Looking back, the things that were so amazing for me then were: Computers were machines that let you do anything. No matter what field of expertise you were interested in, computers were absolutely going to be part of that industry. So, being a programmer (what we call a software engineer today) was the ultimate meta-career. You could become an expert in any business domain, but use computing as the base of that understanding. To me, it was the most powerful job in the world, and that's what I wanted. Another aspect was that the community of programmers was not based on any form of elitism - it was for hobbyists (nerds?), and while we were all very different people, we shared the same excitement for the domain. And so, it was powerful and it was for everyone.

How would you describe the computing/tech job market today? And what skills do you think are critically necessary to possess before graduation?

The market in 2023 is tough for sure. We are in a period of extreme fiscal constraint, but these cycles come and go, and you have to not be a victim. So, in terms of how to position yourself most favorably in the current market, I would say:

Broaden your skillset. Be knowledgeable about everything from TypeScript and JavaScript to React/Native, Django, Python, SQL, PyTorch, and TensorFlow. Also, know how to deploy on cloud systems, CI/CD, and app stores. I know that sounds a lot, but the modern systems (GitLab/GitHub, cloud services) make it all quite accessible. You need to be the person who can say "I can do that." Everything you need to know is freely available to learn online. All you need is your desire to learn and a bit of time.

Be the assertive voice in the conversation. With regard to machine learning, there's so much froth in the market right now. What actually is viable? How does this stuff work? What should you do yourselves? What even is a "model" or a "system" or a "layer"? What's the difference between CNNs, DNNs, and RNNs? How do LLMs actually work? As such, what are they good for? You can learn all of this online. But you have to go deep. You need to ACTUALLY know what you're talking about, and that comes through implementing something and refining it. Is all of this a lot to ask of graduates? I'm sorry, but no, not at all. This is the price of entry to the market. And it will actually put you in a better position than most people already in this market.

What advice do you have for computing and data science undergraduate and graduate students?

Apart from the above (which is a lot), I would say: Contribute to open source projects. Real contributions, over a period of time - ideally years. There is no better resume than real code. And if you can't code, you can make the website better, create documentation or how-to videos, or deal with customer/community support. Contributing to OSS is a public internship. For example, look at FFmpeg (and associated video projects). Most of the world's streaming systems use these codebases. If you can successfully contribute to open source video, your code will be used by billions of people worldwide in a matter of months. Where else would you get that kind of opportunity? If you want to make your mark on the world, Open Source is wide open for you. Right now.

- Maureen McCarthy, Editor, CDS News