How Can We Tackle AI-Fueled Misinformation and Disinformation in Public Health?

UN Under-Secretary-General for Global Communications Melissa Fleming Shares Strategies for Using Fact-based Science Communications to Build Trust

In the post-pandemic era, artificial intelligence (AI) has played a major role in the dissemination of information. AI has also facilitated the spread of misinformation and disinformation across social media platforms through algorithms that amplify posts designed to generate outrage and de-prioritize posts from institutions, like the United Nations, that work to dispel these false narratives. From hate speech to conspiracy theories, AI-fueled misinformation and disinformation serve to polarize society and create a harmful online environment. The World Economic Forum identified misinformation and disinformation as the most severe short-term threat facing the world today.

Melissa Fleming, UN Under-Secretary-General for Global Communications and BU alumna (COM ’89), gave a talk at Boston University on April 4th where she shared insights into the United Nations’ perspective on harnessing AI to build resilience in global communication. Fleming’s lecture discussed the challenges and opportunities of AI in disseminating accurate global public health communication, particularly in the areas of vaccines, climate change, and the well-being of women and girls.

With the advent of AI, “those who might have been screaming in a park with an audience of three now have the ability to reach thousands, if not millions of people,” said Fleming. “One of our biggest worries is the ease with which new technologies can help spread misinformation easier and cheaper, and that this content can be produced at scale and far more easily personalized and targeted.”

Polarization and Mistrust about Vaccines

Fleming highlighted that one of the most pronounced areas where misinformation has taken root is in the realm of vaccines. Especially since the pandemic, misinformation about vaccines has skyrocketed, and the use of AI has only made this easier and cheaper. In fact, if someone searches for information about vaccines online, there is around a 78% chance of finding misinformation or disinformation. Fleming also shared that because information surrounding vaccines has become polarized, many celebrities and influencers are unwilling to promote the benefits of vaccination for fear of being attacked. As a result, outbreaks of fully controlled diseases have become more prevalent, including the measles outbreak in Florida in February 2024.

During the pandemic, the UN released media literacy campaigns such as the Verified and #TakeCareBeforeYouShare campaigns. Both are designed to make online users stop and think before posting or re-sharing content that could be misinformation, with the aim of creating a more informed and engaged public online. Fleming also mentioned a UN volunteer program looking for “digital first responders,” essentially volunteers who research information before posting and promote the dissemination of accurate content.

Climate Deniers

Another area rampant with misinformation is climate change. Fleming described “merchants of outrage,” or people who understand how to work the algorithm – i.e., release content designed to outrage and polarize viewers – and then make money off the interactions with their posts. This means that there is a financial incentive for some to spread misinformation online.

“Since Elon Musk took over X (formerly known as Twitter), all of the climate deniers are back, and [the platform] has become a space for all kinds of climate disinformation,” said Fleming. “There is a connection that people in the anti-vaccine sphere are now shifting to the climate change denial sphere.”

After Musk fired his trust and safety team, the amount of misinformation on the X platform – especially pertaining to climate change – skyrocketed. One trending hashtag on the platform was #ClimateScam, which became rampant with disinformation and misinformation about the science behind climate change.

As is the case with the vaccine sphere, AI makes misinformation about climate change easier and cheaper to produce, which in turn reaches a wider audience and diminishes trust in public institutions.

Instead of trying to deny every false claim about climate change, Fleming and her team focus on promoting accurate and fact-based information in order to give institutions like the UN more agency. This approach also creates more positive interactions with communities on social media platforms.

Fleming illustrated the strategy using an example of a false claim like solar panels not working in the rain. “Instead of putting an X over the post, we are trying to find clever, positive, solutions-focused approaches to promote content on the benefits of renewable energy,” said Fleming. “This content subtly addresses the disinformation and injects climate solutions and fact-driven arguments into the posts. This approach gives people solutions for the future, and makes our future selves say thank you.”

Online Misogyny’s Impact on Women and Girls

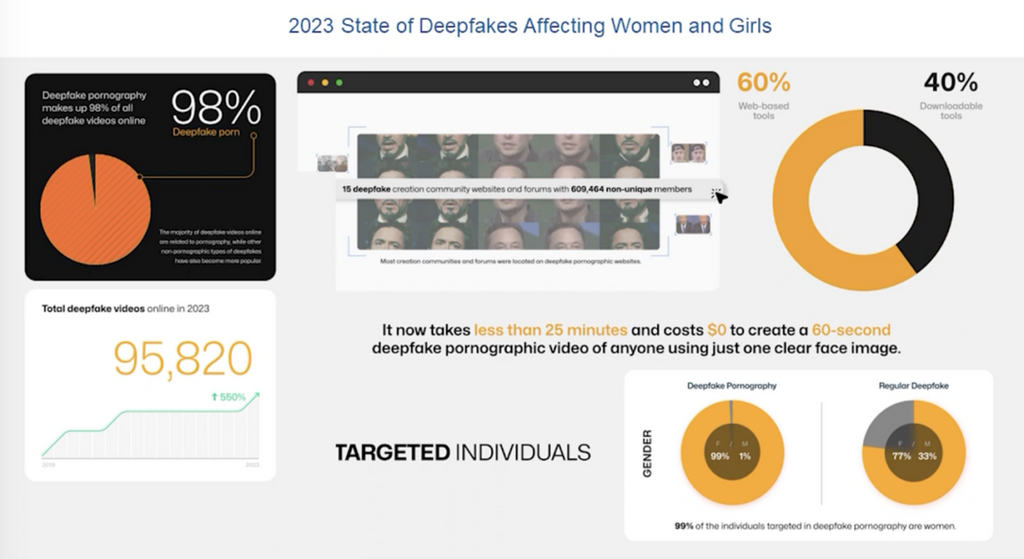

Generative AI is also being used to silence, intimidate, and humiliate women and girls, said Fleming. Online misogyny, including death threats, rape threats, and humiliating doctored photos, is increasingly being used as a weapon to shut down critics, such as leaders, politicians, activists, journalists, and other women. Fleming cited a 2022 UNESCO survey of female journalists around the world showing that 73% had experienced online violence and 20% had been attacked offline in incidents they felt were linked. AI-generated images are easy to create, and the monetization of online misogyny provides an incentive.

“Generative AI tools have handed these bad actors a new arsenal of weapons with deepfake technology,” said Fleming. “Deepfakes are being used to generate non-consensual pornographic images to shame and humiliate women and girls in all walks of life. While some AI softwares have tried to build in safeguards to prevent images like this from being made, most AI built-in safeguards are currently either non-existent or very easily bypassed.”

Reclaiming the Conversation

Fleming acknowledged that AI certainly is not going away and that there are many ways in which AI can be deployed to be part of the solution. She and her team are working to build coalitions and initiatives that leverage AI to promote exciting, positive, fact-driven global public health communications.

The Humanly Possible campaign, launched April 24, is an example of this strategy. The campaign will release statistics on how many lives have been saved by vaccination and encourages people to share vaccine stories. It aims to “celebrate vaccines as one of humanity’s greatest achievements, up there with landing on the moon,” said Fleming.

Other initiatives include creating an official UN code of conduct on information integrity in the digital space, and meeting with UN member states at a general assembly to promote safe, secure, and trustworthy AI systems.

Fleming invited us to “reclaim the conversation… by joining forces to spread the word and becoming the masters of the algorithm.”

This guest lecture was cosponsored by the Center on Emerging Infectious Diseases (CEID) and the Hariri Institute for Computing.

Watch the full lecture given by Melissa Fleming, UN Under-Secretary-General for Global Communications, here.