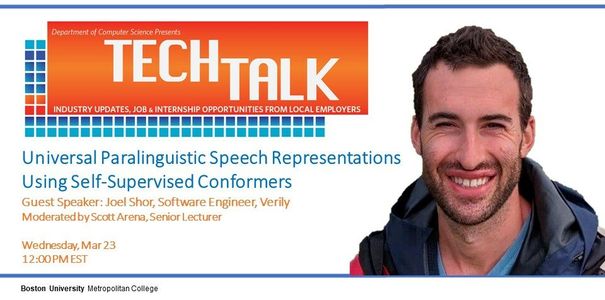

Universal Paralinguistic Speech Representations Using Self-Supervised Conformers | March 23, 2022

Guest Speaker: Joel Shor, Software Engineer, Verily

Moderated by Scott Arena, Master Lecturer

Abstract: In recent years, we have seen dramatic improvements on lexical tasks such as automatic speech recognition. However, machine systems still struggle to understand paralinguistic aspects of speech, such as tone, emotion, or whether a speaker is wearing a mask. Understanding these aspects is one of the remaining difficult problems in machine hearing. In addition, state-of-the-art results often come from ultra-large models trained on private data, making them impossible to run on mobile devices or release publicly. In “Universal Paralinguistic Speech Representations Using Self-Supervised Conformers”, to appear in ICASSP 2022, we describe an ultra-large self-supervised speech representation that outperforms nearly all previous results in our paralinguistic benchmark, sometimes by large margins, even though previous results are often task-specific.

Joel Shor studied mathematics, physics, and computational neuroscience at Princeton. He worked for an image-search startup before joining Google California, where he worked on image compression and speech synthesis. He then moved to Google Israel to start an audio biomarkers team, and co-started Project Euphonia, which gives people who have lost their voice to illness the ability to speak again. In 2020, he moved to Japan and helped start an AI for Social Good team at Google Japan, which used technology to improve society, including launching a COVID-19 forecasting model that helped shape the Japanese government's response. He now works at the life sciences company Verily in Boston, where he uses voice, image, and video features to improve diagnostic tools for physicians.

Students are encouraged to prepare their resumes for TECH Talk events to discuss job and internship opportunities.