The Universal Decoder That Works in One Microsecond

By Patrick L. Kennedy

Assistant Professor Rabia Yazicigil (ECE) and colleagues from MIT and Maynooth have developed the first silicon chip that can decode any error-correcting code, even codes that don’t yet exist. The work could lead to faster and more efficient 5G networks and connected devices. “This could change the way we communicate and store information,” says Yazicigil, who presented the chip measurements at the recent IEEE European Solid-State Device Research and Circuits Conference.

An error-correcting code is not the kind of code you’d use to direct spies or spell out a message about Ovaltine. Rather, it’s what protects the data you send, be it a text message or a video file, from random electromagnetic interference as it travels across the globe, passing through waves of competing signals from cell towers, solar radiation, and other noise. Those disturbances can corrupt the signal by flipping bits from 1 to 0 or vice versa.
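To make the idea of bit flips concrete, here is a minimal Python sketch that models the channel as a binary symmetric channel. This is a standard textbook assumption in which every bit is flipped independently with the same probability `p`. Real interference is messier than this model, and the function name `binary_symmetric_channel` and the parameter values are illustrative only.

```python
import random


def binary_symmetric_channel(bits, p, rng=None):
    """Flip each bit independently with probability p; return (received, noise)."""
    if rng is None:
        rng = random.Random()
    # noise[i] == 1 marks a bit that the channel flipped
    noise = [1 if rng.random() < p else 0 for _ in bits]
    # In binary, adding noise is XOR: 1 ^ 1 == 0 and 0 ^ 1 == 1
    received = [b ^ e for b, e in zip(bits, noise)]
    return received, noise


if __name__ == "__main__":
    sent = [1, 0, 1, 1, 0, 0, 1, 0]
    received, noise = binary_symmetric_channel(sent, p=0.1, rng=random.Random(7))
    print("sent:    ", sent)
    print("noise:   ", noise)
    print("received:", received)
```

Because XOR is its own inverse, XOR-ing the same noise pattern back onto the received bits recovers what was sent. A decoder ultimately has to perform this step once it knows, or guesses, the noise.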

For decades, communication engineers have gotten around this problem by adding a code to the data. This is essentially a redundant string of bits (or “hash”) appended to each message. Complicated algorithms let the receiver use that redundancy to determine what errors occurred, if any, and to reconstruct the original message. The drawback is that each of these decoding algorithms is tailored to one of several commercially agreed-upon codebooks, and so far each codebook has required a separate chip or other piece of hardware.
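As an illustration of a conventional, code-specific decoder, here is a minimal Python sketch of the textbook Hamming(7,4) code. It adds three redundant parity bits to every four data bits. The article doesn’t name the codes the chip was tested on. Hamming(7,4) is used here only because it is small, and real 5G systems rely on far more elaborate codes such as LDPC and polar codes. Notice that the decoding logic is hard-wired to this one code: which parity checks to recompute, and how to turn their results into an error position.

```python
def hamming74_encode(data):
    """Encode four data bits as a seven-bit Hamming(7,4) codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    # Positions 1..7 hold p1 p2 d1 p3 d2 d3 d4
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(received):
    """Correct at most one flipped bit, then return the four data bits."""
    r = list(received)
    # Recompute each parity check; list index i holds position i + 1
    s1 = r[0] ^ r[2] ^ r[4] ^ r[6]
    s2 = r[1] ^ r[2] ^ r[5] ^ r[6]
    s3 = r[3] ^ r[4] ^ r[5] ^ r[6]
    # The failed checks spell out the position of a single error in binary
    error_position = s1 + 2 * s2 + 4 * s3
    if error_position:  # 0 means every check passed
        r[error_position - 1] ^= 1
    return [r[2], r[4], r[5], r[6]]


if __name__ == "__main__":
    data = [1, 0, 1, 1]
    codeword = hamming74_encode(data)   # [0, 1, 1, 0, 0, 1, 1]
    corrupted = list(codeword)
    corrupted[5] ^= 1                   # the channel flips one bit
    print(hamming74_decode(corrupted))  # [1, 0, 1, 1]
```

A different code would need a different decoder with different logic. In hardware, that specialization is what has meant one chip per codebook.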

As a powerful alternative, Yazicigil’s colleagues Muriel Médard at MIT and Ken R. Duffy at the National University of Ireland at Maynooth have hit upon an algorithm called Guessing Random Additive Noise Decoding (GRAND).

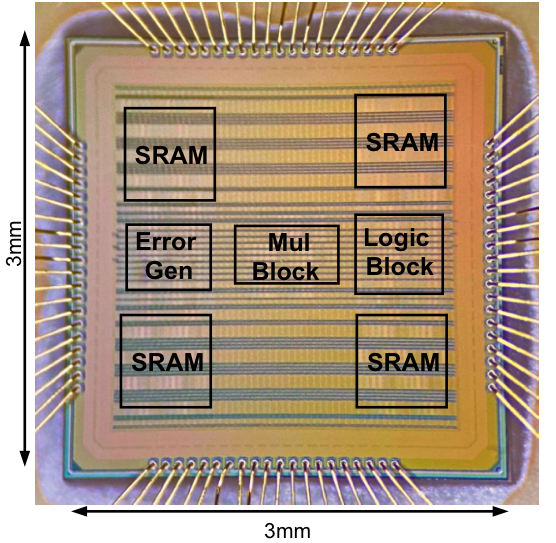

“As compared to the traditional decoders, the GRAND decoder uses an entirely different approach, independent of the code structure, that will revolutionize communication systems,” says Yazicigil, whose team at BU developed the first hardware realization of the GRAND algorithm. With students Arslan Riaz and Vaibhav Bansal, Yazicigil successfully designed the chip in 40-nanometer CMOS technology, eliminating the need for code-specific decoders and enabling universal decoding of any data with little lag time.

“Instead of using code-specific hashes to decode the message, we intelligently guess the noise in the channel, then check whether the data is correctly retrieved to deduce the original message,” says Yazicigil. “That’s why GRAND is a universal decoder—because the noise in the channel affects the transmitted messages independently of what codebook you’re using.”

The GRAND chip “guesses” the noise by rapidly cycling through possible noise patterns, from the most likely to the least. “Typically, you have zero errors, so that’s the most likely noise sequence; then the next most likely is to have one bit flipped,” says Yazicigil. “Then two bits, and so on.” For each guess, GRAND subtracts the noise pattern from the received data to obtain a candidate message. To verify the candidate, the chip checks it against a codebook, and the first guess that yields a valid codeword reveals the original message. (The chip supports multiple codebooks and can load two of them at a time, switching seamlessly from one to the next.)
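The guessing loop described above can be sketched in a few lines of Python. This is a simplified, hard-decision illustration under stated assumptions, not the chip’s actual design. It makes three assumptions:

- A binary code is described by a parity-check matrix `H`, and a word belongs to the codebook exactly when every row of `H` sums to zero mod 2.
- Bits flip independently with probability p below 1/2, so a pattern with w flips, whose probability is p^w (1-p)^(n-w), is always more likely than any pattern with more flips.
- The search stops at a hypothetical `max_weight`.

The names `grand_decode`, `is_codeword`, and the example matrices are illustrative.

```python
from itertools import combinations


def is_codeword(word, parity_check):
    """Codebook membership test: every row of H must sum to 0 mod 2."""
    return all(sum(h * w for h, w in zip(row, word)) % 2 == 0 for row in parity_check)


def grand_decode(received, parity_check, max_weight=3):
    """Hard-decision GRAND sketch: guess noise patterns from fewest flips to most.

    Returns (codeword, flipped_positions), or (None, None) if no pattern of up to
    max_weight flips produces a valid codeword.
    """
    n = len(received)
    for weight in range(max_weight + 1):  # zero flips first, then one, then two, ...
        for positions in combinations(range(n), weight):
            candidate = list(received)
            for i in positions:  # "subtract" the guessed noise (XOR in binary)
                candidate[i] ^= 1
            if is_codeword(candidate, parity_check):  # the only code-specific step
                return candidate, positions
    return None, None  # abandon the search and report a decoding failure


# Two unrelated codes, each described only by a parity-check matrix H
HAMMING_7_4_H = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]
REPETITION_5_H = [  # codewords are 00000 and 11111
    [1, 1, 0, 0, 0],
    [1, 0, 1, 0, 0],
    [1, 0, 0, 1, 0],
    [1, 0, 0, 0, 1],
]

if __name__ == "__main__":
    print(grand_decode([0, 1, 1, 0, 0, 0, 1], HAMMING_7_4_H))  # ([0, 1, 1, 0, 0, 1, 1], (5,))
    print(grand_decode([1, 1, 0, 1, 0], REPETITION_5_H))       # ([1, 1, 1, 1, 1], (2, 4))
```

Only `is_codeword` knows anything about the code. The same `grand_decode` therefore works unchanged when you swap in a different parity-check matrix, which is the code-independence the researchers describe.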

The whole process takes roughly a microsecond on average and consumes only 30.6 picojoules of energy per decoded bit. The chip draws an average of 3.75 milliwatts from a 1.1-volt supply. The team demonstrated that the GRAND chip could decode any moderate-redundancy code up to 128 bits long, with decoding performance that compares well with that of standard code-specific decoders.
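As a rough consistency check, assume the energy and power figures were measured under the same average operating conditions. Dividing the average power by the energy per decoded bit then gives the implied average decoded throughput:

$$
\text{throughput} \approx \frac{P_{\text{avg}}}{E_{\text{bit}}} = \frac{3.75 \times 10^{-3}\ \text{W}}{30.6 \times 10^{-12}\ \text{J/bit}} \approx 1.2 \times 10^{8}\ \text{bit/s}
$$

That works out to roughly 120 megabits per second. This is back-of-the-envelope arithmetic from the two reported numbers, not a figure quoted by the team.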

These findings have implications for data storage, connected devices, remote gaming, movie streaming, and any forthcoming improvements in the world’s communication networks.

“Because this one piece of hardware is compatible with any coding schemes that will be developed, it’s future-proofing too,” says Yazicigil. As the team continues to refine GRAND and its chip, “we can provide adaptability to different standards, different technologies, 5G, 6G, or whatever comes next,” she says.

The research was funded by the Battelle Memorial Institute and Science Foundation Ireland. Médard is the Cecil H. and Ida Green Professor in MIT’s Department of Electrical Engineering and Computer Science, and Duffy is director of the Hamilton Institute at Maynooth.

Graphic by Gabriella McNevin-Melendez using photos from Unsplash by Robin Worrall and Alexander Sinn.