Neuroimaging in the Everyday World

The Need: Understanding how a healthy brain works, and what can be done to prevent and treat acquired and developmental brain disorders, is one of the primary aims of contemporary neuroscience research and a major focus of the BRAIN Initiative. Catalyzing the scientific breakthroughs needed to reach this aim requires multidisciplinary teams that can enhance current neuroscience technologies and apply them to healthy and clinical populations rapidly and effectively. The current state of the art, led by fMRI, has driven profound advances in our understanding of brain function under well-controlled and constrained conditions. For instance, we now have deep insight into healthy brain function, including neurodevelopment 1, perception and cognition 2, and motor control 3. Moreover, these insights have led to advances in characterizing and diagnosing brain disorders, and in developing potential targeted interventions for them, including autism spectrum disorder 4–7, Parkinson’s disease 8, traumatic brain injury (TBI) 9,10, stroke 11,12 and chronic traumatic encephalopathy 13. While we better understand how the brain functions in single-snapshot experiments in restricted lab settings, we do not know how it works in dynamic, complex and multisensory real-world environments. The challenge in pushing this work forward is to develop the capability to continuously track human brain function and behavior in real time, in order to understand how a healthy brain works and how and when failures in simple human actions occur. The BU Neurophotonics Center’s (NPC) “Neuroimaging in the Everyday World (NEW)” technology, being developed under NIH BRAIN Initiative Contract U01-EB029856, will make this possible and bring about a revolution in functional brain imaging as big as that brought about by fMRI.

The Solution: Functional Near Infrared Spectroscopy (fNIRS) and EEG are two safe and non-invasive neuroimaging techniques that enable brain imaging in naturalistic settings. fNIRS is an alternative to fMRI that maps hemodynamic responses to brain activity. Because it is less susceptible to motion artifacts than fMRI and allows researchers to collect data in ecologically valid settings, it has great potential for studying natural behaviors and neurological populations 14. EEG measures fast electrical responses associated with neuronal activity and has been widely used for studying brain activity in naturalistic environments. The two techniques are complementary: EEG captures neural activity on a millisecond time scale, while fNIRS measures slower, integrated hemodynamic changes with better spatial localization. Building on prior NPC research, we now recognize that it is a straightforward engineering effort to build a wearable system that optimally combines fNIRS with EEG and with eye-tracking/pupillometry (to time-lock brain responses to behavioral data). With 24-hour recording capability, such a system would provide a more complete picture of brain activation patterns in the Everyday World by capturing vascular and neural responses and their interactions (see illustration of the proposed solution in the figure below). It will significantly broaden the spectrum of fNIRS applications by allowing studies of brain function in more natural environments (social interactions, outdoor walking), more efficient monitoring of patients with neurological disorders (stroke, Alzheimer’s, Parkinson’s, concussion), and studies of normal and abnormal brain development (autism, language development). Through our integrated measurement of behavior and brain activation patterns, we will be able to track the breakdown of normal brain function in clinical populations as patients go about their daily lives, revealing brain signatures of neurological dysfunction. Such insights will be crucial to guiding novel treatment approaches for these individuals.
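fNIRS infers changes in oxygenated (HbO) and deoxygenated (HbR) hemoglobin from changes in detected light at two or more wavelengths, most commonly via the modified Beer-Lambert law (MBLL): each wavelength's optical density change is modeled as the sum of each chromophore's extinction coefficient times its concentration change times the effective path length (source-detector separation times a differential pathlength factor, DPF). The sketch below illustrates that conversion under those textbook assumptions; it is not the NPC processing pipeline, the function names are hypothetical, and the extinction coefficients in the usage example are placeholders rather than tabulated physical values.

```python
import numpy as np


def delta_optical_density(intensity):
    """Change in optical density relative to each channel's mean intensity.

    intensity: array of shape (n_samples, n_wavelengths) of raw detector readings.
    Uses a base-10 logarithm; the log base must match the convention of the
    extinction coefficients passed to mbll().
    """
    intensity = np.asarray(intensity, dtype=float)
    return -np.log10(intensity / intensity.mean(axis=0))


def mbll(delta_od, ext_coeffs, separation_cm, dpf):
    """Convert optical density changes into [dHbO, dHbR] with the modified Beer-Lambert law.

    delta_od:      (n_samples, n_wavelengths) optical density changes
    ext_coeffs:    (n_wavelengths, 2) extinction coefficients, columns = [HbO, HbR]
    separation_cm: source-detector separation in cm
    dpf:           (n_wavelengths,) differential pathlength factors
    Returns an (n_samples, 2) array of concentration changes.
    """
    ext_coeffs = np.asarray(ext_coeffs, dtype=float)
    dpf = np.asarray(dpf, dtype=float)
    # Effective optical path length at each wavelength: d * DPF(lambda)
    path = separation_cm * dpf
    # Model: dOD(lambda) = sum_i eps_i(lambda) * dC_i * d * DPF(lambda)
    system = ext_coeffs * path[:, None]
    # Solve system @ dC = dOD for all samples at once (least squares also handles >2 wavelengths)
    dc, *_ = np.linalg.lstsq(system, np.asarray(delta_od, dtype=float).T, rcond=None)
    return dc.T


if __name__ == "__main__":
    # Placeholder coefficients for illustration only, NOT tabulated physical values.
    eps = np.array([[0.4, 1.1],
                    [1.0, 0.7]])
    true_dc = np.array([[1e-3, -5e-4]])
    dod = true_dc @ (eps * (3.0 * np.array([6.0, 6.0]))[:, None]).T
    print(mbll(dod, eps, separation_cm=3.0, dpf=[6.0, 6.0]))
```

With two wavelengths the system is square and the solve is exact; using least squares simply lets the same function accept additional wavelengths.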

Why BU and Why Now: Integrating BU’s strengths in Photonics, the Neurosciences, and the Data Sciences, the Neurophotonics Center (NPC) was launched in 2017 to catalyze and translate innovative scientific breakthroughs in the neurosciences by integrating multidisciplinary teams that can advance neurophotonic technologies and apply them to healthy and clinical populations rapidly and effectively. At the same time, BU was awarded an NSF Research Traineeship (NRT) grant to promote training in Neurophotonics, which has helped grow a community of more than 70 trainees from 10 departments. In addition to enhancing training opportunities and quality at BU, the NRT grant also strengthens interactions among NPC faculty and their research teams. At present, most engaged NRT trainees and NPC faculty conduct research in animal models, but BU has just as many trainees and faculty focused on human studies who would benefit from engagement in the BU Neurophotonics Community. Indeed, a growing number of these researchers are joining this community because they want to use the NPC’s fNIRS expertise to expand the impact of their human research.

Leveraging BU’s international leadership in fNIRS development and application; its leadership in perceptual and cognitive neurosciences and rehabilitation, with faculty at the Center for Systems Neuroscience, the Cognitive Neuroimaging Center, and the Center for Neurorehabilitation; and its strength in the Data Sciences to develop, apply, and optimize our NEW technology, the NPC is creating a BU-centered hub of activity with a growing breadth of neuroscience applications and data science problems that will engage a large cohort of researchers at BU, throughout Boston, and across the world.

Our Plan: The NPC is developing the “Neuroimaging in the Everyday World” hardware, demonstrating its utility in performing unconstrained measurements outside of the lab and in the Everyday World, and advancing the signal processing algorithms needed to analyze and interpret the experimental measurements. Further, to realize the full impact of this technology, the NPC is growing its training and dissemination efforts. The NPC efforts capitalize on four distinct strengths and innovative thrusts at BU.

Aim 1: Engineering Innovation – Development of Multi-Modal fNIRS/EEG for Continuous and Long-Term Recordings in the Everyday World: The key outcome of this thrust will be a customized device that incorporates high-density fNIRS and EEG with Tobii eye-tracking glasses into a wireless, fiberless hybrid headset, offering the performance and usability needed for application in the Everyday World.

Aim 2: Innovation in Real World Behavioral Measurements – Translation of Existing Laboratory Behavioral Tasks to the Everyday World: We will measure brain activity during walking, perceiving, and interacting, in experiments that gradually increase in complexity from lab to real-world settings in young healthy adults. Studies in clinical populations, such as individuals with Parkinson’s disease and stroke, will provide proof of principle that untethered functional neuroimaging can reveal breakdowns in the diseased compared with the healthy brain, laying the groundwork for future efforts in neurofeedback for rehabilitation. A key outcome will be fNIRS measurements of participants going about everyday activities that combine walking, perception, and interaction tasks.

Aim 3: Data Science Innovation – Development of Multimodal Methods for Neuroimaging in the Everyday World: We will address challenges in collecting and analyzing the large multimodal datasets acquired in our experiments through novel data science approaches: 1) to reject artifacts from movement, interaction, and the environment, signal components must be reliably separated into evoked brain activity, local and systemic physiological interference, and non-physiological noise; 2) the Everyday World yields a plethora of sensory stimuli that provide the context for linking brain activity and behavior, so we must extract that context from the acquired video, eye-tracking, and audio data using cutting-edge computer vision and speech-to-text solutions, which will provide triggers to guide analysis of the brain activation signals. A key outcome will be to fuse and exploit the combined strengths of high-density fNIRS, EEG, and auxiliary biosignals using novel machine-learning-driven multimodal signal processing and computer vision, working towards automated labeling of stimuli.
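One widely used way to attack the first separation problem (removing systemic physiological interference) is short-separation regression: a short source-detector channel mostly samples the scalp, so its signal can be regressed out of a nearby long channel. The sketch below shows that technique with ordinary least squares; it is offered as an illustration, not as the method Aim 3 will develop, and the function name is hypothetical.

```python
import numpy as np


def short_channel_regression(long_ch, short_ch):
    """Regress a short-separation channel out of a long-separation channel.

    long_ch, short_ch: 1-D arrays sampled on the same time base.
    Returns (cleaned, beta): the residual signal and the scaling coefficient
    applied to the short channel. An intercept is also fitted, so the
    cleaned signal has zero mean.
    """
    long_ch = np.asarray(long_ch, dtype=float)
    short_ch = np.asarray(short_ch, dtype=float)
    # Design matrix: short-channel signal plus a constant term
    design = np.column_stack([short_ch, np.ones_like(short_ch)])
    coef, *_ = np.linalg.lstsq(design, long_ch, rcond=None)
    cleaned = long_ch - design @ coef
    return cleaned, coef[0]


if __name__ == "__main__":
    t = np.arange(0, 60, 0.1)                         # 60 s at a synthetic 10 Hz rate
    systemic = np.sin(2 * np.pi * 0.1 * t)            # slow oscillation seen by both channels
    evoked = np.where((t > 20) & (t < 35), 1.0, 0.0)  # hypothetical evoked response
    cleaned, beta = short_channel_regression(evoked + 2.0 * systemic, systemic)
    print(round(beta, 3))
```

In practice the short-channel signal is often entered as an extra regressor in a general linear model alongside the stimulus regressors, rather than removed in a separate step as here.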

Aim 4: The NEW Hub of Activity: We will create a strongly interlinked, ongoing training program that enables BU researchers to enter and impact this new field. We will start a week-long summer school for researchers from around the world who want to enter this field, followed by a 2-day conference on Neuroscience in the Everyday World. We will disseminate the hardware and software technology through open-access channels and commercial partners.

This new technology is a game changer for continuous recording of brain function, opening the door to a new frontier of investigation into how the brain functions and when and why breakdowns in perception, walking, and communication occur. Once validated, these novel approaches will create a new area of research, the Neuroscience of the Everyday World, accelerated by our commitment to openly disseminating the technology. This work will ultimately lay the groundwork for novel treatments for neurological disorders.

Summaries of several of the related NPC faculty-led projects are provided on the following pages.

NinjaNIRS 2021: Progress towards whole head, high density fNIRS

Wearable functional near infrared spectroscopy (fNIRS) technology is an essential tool for neuroimaging. Recent advances in the design of high-density, fiberless fNIRS imaging systems have made this imaging modality more accessible; however, the weight and limited portability of these systems remain a concern. In the Boas lab, Bernhard Zimmerman, Joe O’Brien, Antonio Ortega Martinez, and Robert Bing are advancing an open-source fNIRS system with modular, scalable, high-density optode arrangements for use in real-life applications.

The NinjaNIRS 2021 system builds on the previous NinjaNIRS 2020 system described at https://openfnirs.org/hardware, using an improved optode design with the same control unit and user interface. Two optode modules replace the combined source-and-detector optode of NinjaNIRS 2020: a dual-wavelength LED source, and a detector containing a PIN photodiode and a 16-bit ADC. These optodes have a smaller footprint than the dual optode design, allowing a minimum source-detector separation of 12 mm. The current control unit supports up to 8 sources and 12 detectors, and the design has the potential to scale to 32 sources and 108 detectors controlled by a single unit. The new sources are packaged in a two-stage encapsulation: an epoxy potting compound protects the electronic components, and silicone rubber provides heat isolation and improved ergonomics. The optical performance of the optodes was assessed through noise equivalent power (NEP) testing of the detectors and by measuring the optical power produced by the sources. Unencapsulated detector optodes showed an NEP of 116 fW/√Hz, and the sources produced 5.5 ± 0.5 mW at 730 nm and 11.5 ± 0.5 mW at 850 nm. Human studies have shown that the design is sensitive enough to measure cardiac signals even through thick hair over the motor region of the scalp; however, further refinement is required for subjects with especially dense, dark brown or black hair.
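To put the measured NEP in context: NEP is the input optical power that yields a signal-to-noise ratio of 1 in a 1 Hz bandwidth, so the minimum detectable power over a detection bandwidth B scales as NEP × √B. The sketch below computes that floor for the reported 116 fW/√Hz; the 10 Hz bandwidth is a hypothetical value chosen for illustration, not a NinjaNIRS specification.

```python
import math

# Measured NEP of the unencapsulated NinjaNIRS 2021 detector optodes (from the text).
NEP_W_PER_SQRT_HZ = 116e-15


def noise_equivalent_power(nep_w_per_sqrt_hz, bandwidth_hz):
    """Minimum detectable optical power (SNR = 1) over the given detection bandwidth."""
    return nep_w_per_sqrt_hz * math.sqrt(bandwidth_hz)


if __name__ == "__main__":
    bandwidth_hz = 10.0  # hypothetical detection bandwidth, chosen for illustration
    floor_w = noise_equivalent_power(NEP_W_PER_SQRT_HZ, bandwidth_hz)
    print(f"noise floor: {floor_w * 1e15:.1f} fW")  # -> noise floor: 366.8 fW
```

The light reaching a detector after passing through scalp, skull, and hair must stay well above this floor, which is why dense, dark hair (which attenuates the signal further) remains the limiting case.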

The separate source and detector optodes of the new NinjaNIRS 2021 system have improved the customizability of probe design and enabled higher-density measurements than were previously possible with our wearable fNIRS device. With high-density measurements now possible, we are seeking to increase the number of optodes that a single unit can control, to allow whole-head, high-density fNIRS measurements with a portable system that can be used in real-world situations outside the lab.

Caption: NinjaNIRS 2021 optodes. (a) Source optode PCB. (b) Detector optode PCB. (c) Fully encapsulated source optode.

Perception, Attention & Working Memory

Attention and working memory mechanisms have very limited capacity (approximately 4 items), and performance is highly susceptible to distraction. The everyday world presents much richer and more compelling distractions than the laboratory setting. Moreover, perception and attention are exploratory processes, yet key mechanisms are left unstudied in nearly all current laboratory-based cognitive neuroscience paradigms because of physical constraints placed on the observer’s ability to move their eyes and/or head. These cognitive and perceptual mechanisms therefore need to be studied in the everyday world. Using wearable fNIRS and integrated eye-tracking/scene camera technology, the NEIPA lab (PI: Somers) is developing and testing experimental paradigms and behavioral analysis methods in which the observer freely interacts with a 3-D environment, both in and out of the laboratory.

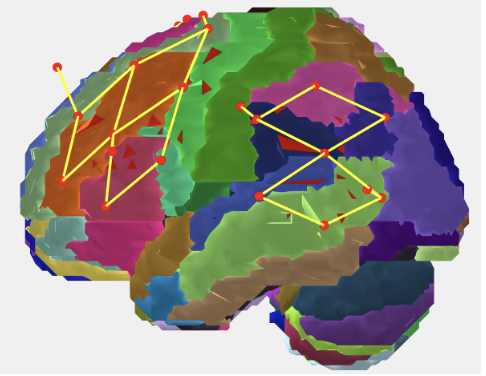

The lab is currently performing fNIRS experiments that measure bilateral frontal lobe activity during visual and auditory attention and working memory tasks. These laboratory-based studies seek to replicate, using mobile fNIRS, the lab’s fMRI observations of multiple sensory-modality-biased structures in frontal cortex (Michalka et al., 2015; Noyce et al., 2017; Noyce et al., 2021). Pilot fNIRS data (n=5) collected with the Techen system have replicated five of the sensory-biased frontal lobe structures found with fMRI. Primary data collection is now ongoing with the NinjaNIRS cap and NIRX optodes. Establishing these fNIRS-based methods is a foundational step in taking neuroimaging studies of perception, attention and working memory out of the laboratory and into the everyday world.

Complex Scene Analysis (CSA) Project

The human brain has the impressive ability to selectively attend to specific objects in a complex scene containing multiple objects. For example, at a crowded cocktail party, some listeners can look at a friend and hear what they are saying amid other speakers, music, and background noise. This multisensory filtering, enabled by selective attention, allows the listener to select and process important objects in a complex scene, a process known as Complex Scene Analysis (CSA). In stark contrast, many humans and state-of-the-art machine hearing algorithms have great difficulty with CSA. Developing a brain-inspired algorithm for CSA therefore has the potential to enhance the performance of both humans and machines.

Humans perform CSA by actively interacting with a complex scene cluttered with multiple objects: orienting via eye and head movements, attending to specific objects, and selectively processing them. However, most neuroimaging has been performed in unrealistic scenarios in which subjects are physically restrained. In the CSA project, the team led by Kamal Sen is planning to use the groundbreaking wearable devices being developed as part of the NEW project to image the brains of humans freely interacting with complex audiovisual scenes. They will use this revolutionary paradigm to image the human brain networks underlying CSA with combined fNIRS and EEG. Exploiting the complementary spatial and temporal resolution of fNIRS and EEG, this work will reveal where and when top-down attention signals arise in the human brain during CSA. The team will then apply machine learning methods to the measured multimodal brain signals to decode the attended location in a complex scene.
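The project does not specify which machine learning method will decode the attended location. As a minimal sketch of what "decoding" means here, the code below uses a nearest-centroid classifier (a deliberately simple, swapped-in technique) with leave-one-out cross-validation on per-trial feature vectors. The feature vectors are purely synthetic stand-ins for, e.g., fNIRS channel amplitudes or EEG band power, and the ±45° sign convention is an assumption.

```python
import numpy as np

LOCATIONS = np.array([-45, 0, 45])  # attended azimuths in degrees (sign convention assumed)


def fit_centroids(features, labels):
    """Mean feature vector for each class."""
    classes = np.unique(labels)
    centroids = np.stack([features[labels == c].mean(axis=0) for c in classes])
    return classes, centroids


def predict(classes, centroids, features):
    """Assign each trial to the class with the nearest centroid (Euclidean distance)."""
    dists = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]


def leave_one_out_accuracy(features, labels):
    """Hold out each trial in turn, train on the rest, and score the held-out prediction."""
    n = len(labels)
    correct = 0
    for i in range(n):
        train = np.arange(n) != i
        classes, centroids = fit_centroids(features[train], labels[train])
        correct += int(predict(classes, centroids, features[i:i + 1])[0] == labels[i])
    return correct / n


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    class_idx = np.repeat(np.arange(3), 20)  # 20 synthetic trials per location
    labels = LOCATIONS[class_idx]
    # Synthetic 8-dimensional features; purely simulated, not recorded data
    features = class_idx[:, None] * 1.0 + rng.normal(0.0, 0.5, size=(60, 8))
    print(leave_one_out_accuracy(features, labels))
```

Cross-validation matters here because scoring a decoder on the trials it was trained on would overstate how well the attended location can be read out from new trials.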

Experimental Design: A computer with three monitors will be used for the experiments. The monitors will be placed at three locations (center, 45° to the left, and 45° to the right), each equidistant from the central position where the subject will sit upright (Figure 1). The monitors will display videos of human faces speaking sentences (Figure 2). The audio of each movie will be delivered either through a speaker at the corresponding location or through headphones, with sounds processed using head-related transfer functions (HRTFs) to virtualize the sound location for each monitor (center, 45° and -45°). The experiment will be divided into two parts. In Part 1, only one movie will be played on each trial (“target alone” condition). In Part 2, all three monitors will play movies simultaneously (“target + maskers” condition), and the subject will be instructed to attend only to the target location. At the end of each trial, the subject will be asked to identify the speaker’s face and the sentence.
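The two-part design can be expressed as a trial list: in Part 1 each trial plays a single target movie, and in Part 2 the other two monitors play masker movies at the same time. The sketch below generates such a list. The number of trials per location, shuffling within each part, and treating left as -45° are assumptions for illustration; the text does not specify them, and the names are hypothetical.

```python
import random
from dataclasses import dataclass

# Azimuths in degrees; treating left as -45 is an assumed sign convention.
AZIMUTH_DEG = {"center": 0, "left": -45, "right": 45}


@dataclass
class Trial:
    part: int
    condition: str
    target: str
    maskers: tuple = ()


def make_trials(n_per_location, seed=None):
    """Part 1 (target alone) followed by Part 2 (target + maskers), shuffled within each part."""
    rng = random.Random(seed)
    locations = list(AZIMUTH_DEG)
    trials = []
    for part, condition in ((1, "target alone"), (2, "target + maskers")):
        block = []
        for target in locations:
            # In Part 2 the other two monitors play masker movies simultaneously
            maskers = tuple(loc for loc in locations if loc != target) if part == 2 else ()
            block.extend(Trial(part, condition, target, maskers) for _ in range(n_per_location))
        rng.shuffle(block)
        trials.extend(block)
    return trials


if __name__ == "__main__":
    for trial in make_trials(n_per_location=2, seed=1):
        print(trial.part, trial.target, AZIMUTH_DEG[trial.target], trial.maskers)
```

Balancing the number of trials at each target location keeps any location-specific decoding or behavioral comparison from being confounded by unequal trial counts.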

Conversation and Social Interaction

The Aphasia Research Laboratory at Boston University (PI: Swathi Kiran) is evaluating brain activation via fNIRS during conversation and social interaction in neurotypical individuals. In a conversation task, participants view and listen to a video recording of a woman asking conversational questions and respond to those questions. In a narrative task, participants watch short videos and provide a summary of each clip. Both tasks were designed to separate brain regions involved in language planning from those involved in speech production. The lab has completed pilot data collection and is beginning to collect participant data. This work will provide a benchmark for understanding how brain activation during conversation and narration may differ in individuals with neurological disorders.

Attention and Walking

Walking is a major part of everyday activity and comprises both voluntary movements (e.g., gait initiation/cessation, regulation of velocity and direction) and automatic processes (e.g., rhythmic limb movements). Walking while avoiding obstacles depends on sensory input from the spatial environment and on cognitive processes, including continuous updating of one’s position relative to landmarks. The consequences of gait impairments are substantial and include increased disability, fall risk, and reduced quality of life. Because of their high attentional demands, dual-task conditions that require simultaneous cognitive engagement exacerbate gait abnormalities and can be particularly difficult for individuals with cognitive difficulties. This joint project of the Vision and Cognition Lab (PI: Alice Cronin-Golomb) and the Center for Neurorehabilitation (PI: Terry Ellis) will use fNIRS to assess walking under single- and dual-task conditions, with increasingly naturalistic (real-world) designs and increasingly long brain activity recordings. The studies progress from standard constrained lab studies (phase 1) to relatively unconstrained overground walking in a large lab space (phase 2) to the unconstrained real-world environment (phase 3). Brain activity and eye-tracking data will be time-locked to the timepoints of recorded verbal instructions during treadmill walking (W1) and to locations during free walking (W2). As the work moves beyond phase 1, the participants will expand from healthy young adults to healthy older adults and individuals with Parkinson’s disease, a movement disorder.
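Time-locking brain activity to events, such as the recorded verbal-instruction timepoints in W1, usually means cutting a fixed window around each event and averaging across events after subtracting a pre-event baseline. The sketch below shows that generic epoching step; the sampling rate, window lengths, event times, and function names are hypothetical. For W2, location-based events would first have to be converted into timestamps (for example from position tracking), which this sketch does not cover.

```python
import numpy as np


def extract_epochs(signal, fs, event_times_s, pre_s, post_s):
    """Cut windows from pre_s before to post_s after each event.

    signal: (n_samples,) or (n_samples, n_channels) array sampled at fs Hz.
    Events whose window would run past either end of the recording are skipped.
    Returns an array of shape (n_kept_events, n_window_samples, n_channels).
    """
    signal = np.asarray(signal, dtype=float)
    if signal.ndim == 1:
        signal = signal[:, None]
    pre = int(round(pre_s * fs))
    post = int(round(post_s * fs))
    epochs = []
    for t in event_times_s:
        onset = int(round(t * fs))
        if onset - pre < 0 or onset + post > len(signal):
            continue
        epochs.append(signal[onset - pre:onset + post])
    if not epochs:
        return np.empty((0, pre + post, signal.shape[1]))
    return np.stack(epochs)


def baseline_correct(epochs, n_baseline):
    """Subtract each epoch's mean over its first n_baseline samples (the pre-event window)."""
    return epochs - epochs[:, :n_baseline].mean(axis=1, keepdims=True)


if __name__ == "__main__":
    fs = 10.0  # hypothetical sampling rate in Hz
    hbo = np.random.default_rng(0).normal(size=(6000, 4))  # 10 min of synthetic 4-channel data
    instruction_times = [30.0, 80.0, 130.0]  # hypothetical verbal-instruction timestamps (s)
    epochs = extract_epochs(hbo, fs, instruction_times, pre_s=5.0, post_s=30.0)
    block_average = baseline_correct(epochs, n_baseline=int(5.0 * fs)).mean(axis=0)
    print(epochs.shape, block_average.shape)  # -> (3, 350, 4) (350, 4)
```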

The purpose of phase 1 of this study is to use fNIRS to examine brain activity during single-task and dual-task walking under overground and treadmill conditions. Subjects will be randomized to start with either the treadmill condition or overground walking (around a short oval-shaped course). Each trial starts with 20 seconds of standing quietly, without talking or moving the head, followed by the instruction “start” (single-task walking) or “start with (number)” (dual-task walking while serially subtracting 3s from the given number), at which point treadmill or overground walking begins and lasts for 30 seconds. This 50-second cycle of standing and walking repeats so that each subject performs eight trials of each condition.
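The phase 1 protocol maps directly onto a block-design schedule: each trial is 20 s of quiet standing followed by 30 s of walking, with the walking onset (the verbal instruction) serving as the event for analysis. The sketch below builds such a schedule with walking onset and offset times. It assumes that "condition" means each surface-by-task combination and that single- and dual-task trials are interleaved at random within a surface; neither is stated in the text, and the names are hypothetical. Under those assumptions the protocol totals 4 × 8 × 50 s = 1600 s (about 27 minutes), excluding breaks and setup.

```python
import random

STAND_S = 20.0  # quiet standing before each walk (from the protocol)
WALK_S = 30.0   # walking period per trial (from the protocol)
TRIALS_PER_CONDITION = 8
INSTRUCTION = {"single": "start", "dual": "start with (number)"}


def build_schedule(seed=None):
    """Return one dict per trial with walking onset/offset times in seconds."""
    rng = random.Random(seed)
    surfaces = ["treadmill", "overground"]
    rng.shuffle(surfaces)  # randomize which surface the subject starts with
    schedule = []
    t = 0.0
    for surface in surfaces:
        # Assumption: single- and dual-task trials are interleaved at random within a surface
        tasks = ["single"] * TRIALS_PER_CONDITION + ["dual"] * TRIALS_PER_CONDITION
        rng.shuffle(tasks)
        for task in tasks:
            onset = t + STAND_S
            schedule.append({
                "surface": surface,
                "task": task,
                "instruction": INSTRUCTION[task],
                "walk_onset_s": onset,
                "walk_offset_s": onset + WALK_S,
            })
            t = onset + WALK_S
    return schedule


if __name__ == "__main__":
    schedule = build_schedule(seed=0)
    print(len(schedule), schedule[-1]["walk_offset_s"])  # -> 32 1600.0
```

The walking onsets produced here are the kind of event times that would be passed to an epoching step when averaging the hemodynamic response across trials.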

Neuromotor Recovery

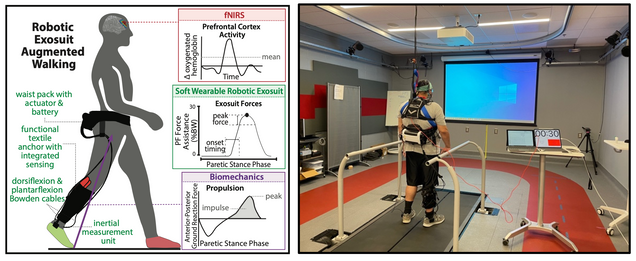

The Neuromotor Recovery Laboratory at Boston University (PI: Lou Awad) is developing and evaluating wearable sensing and robotic technologies for people with difficulty walking due to neuromotor impairments, such as those resulting from stroke. Together with clinical and engineering scientists from the Neurophotonics Center and the Center for Neurorehabilitation, NRT fellow and Sargent College graduate student Regina Sloutsky is combining fNIRS neuroimaging with wearable inertial sensors and movement detection algorithms to study how people with post-stroke hemiparesis use soft robotic exosuits during everyday walking activities. Research to date shows that these next-generation wearable robots can help people post-stroke walk faster, farther, and with less effort. Using fNIRS neuroimaging of prefrontal cortex, we now have evidence that soft robotic exosuits can be tuned to reduce the cognitive effort that people post-stroke require during walking. This research received the Best Paper Award at the 2021 IEEE Conference on Neural Engineering. Building on these promising findings, our research now focuses on developing new algorithms that (i) synthesize measurements of human-machine interaction collected by fNIRS and by the inertial and force sensors embedded in soft robotic exosuits, and (ii) automate a patient-tailored approach to prescribing assistive robotic actuation. Integrating these data streams allows concurrent consideration of multiple salient variables: comfort, biomechanics, and function. This work has the potential to accelerate the widespread adoption of powered wearable actuation technologies for use in the everyday world.

fNIRS to Study Autism Spectrum Disorder

While previous work has explored the neural bases of language processing in typically developing (TD) children and children with Autism Spectrum Disorder (ASD), little is known about how children’s brains function within real-world language environments. This project, led by Meredith Pecukonis with Professor Tager-Flusberg, will use functional near-infrared spectroscopy (fNIRS) to investigate how the brains of preschool-aged children with and without ASD function during a live social interaction (a shared book-reading activity; see Figure), and to examine how two measures of brain function, brain response and functional connectivity, relate to behaviorally measured language and communication abilities. Findings will elucidate the neural mechanisms underlying language deficits and heterogeneous language outcomes in ASD, and may provide further insight into how the brain functions during different types of language interventions. This project is funded by the NIDCD (F31 DC019562).
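Functional connectivity in fNIRS is often computed as the correlation between channel time courses recorded during a task. The project does not state which connectivity measure it will use, so the sketch below simply shows one common choice: a channel-by-channel Pearson correlation matrix, optionally Fisher z-transformed so that values can be averaged or compared across children. The function name is hypothetical and the data in the usage example are synthetic.

```python
import numpy as np


def functional_connectivity(hbo, fisher_z=True):
    """Channel-by-channel Pearson correlation matrix.

    hbo: (n_samples, n_channels) array, e.g. HbO time courses recorded during the task.
    If fisher_z is True, correlations are Fisher z-transformed (arctanh); values
    are clipped just inside +/-1 so the diagonal stays finite.
    """
    r = np.corrcoef(np.asarray(hbo, dtype=float), rowvar=False)
    if not fisher_z:
        return r
    return np.arctanh(np.clip(r, -0.999999, 0.999999))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    shared = rng.normal(size=1200)  # synthetic common fluctuation
    channels = np.column_stack([shared + rng.normal(scale=0.5, size=1200) for _ in range(4)])
    print(np.round(functional_connectivity(channels, fisher_z=False), 2))
```

A channel with a constant (flat) signal has zero variance, so its correlations are undefined and `np.corrcoef` returns NaN for them; such channels would normally be excluded during quality control before this step.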