Easy, Effective, Efficient: GPU programming with PyOpenCL and PyCUDA

by Andreas Klöckner

Courant Institute of Mathematical Sciences, New York University

Why this course?

High-level scripting languages are in many ways polar opposites of GPUs. GPUs are highly parallel, subject to hardware subtleties, and designed for maximum throughput, and they offer a tremendous performance advantage for a significant number of computational problems.

On the other hand, scripting languages such as Python favor ease of use over computational speed and do not generally emphasize parallelism. PyOpenCL and PyCUDA are two packages that attempt to join the two together. This course aims to show you that by combining these opposites, a programming environment is created that is greater than just the sum of its two parts.

PyOpenCL (and also PyCUDA) can be used in a large number of roles, for example as a prototyping and exploration tool, as an optimization helper, as a bridge to the GPU for existing legacy codes, or, perhaps most excitingly, to support an unconventional hybrid way of writing high-performance codes, in which a high-level controller generates and supervises the execution of low-level (but high-performance) computation tasks to be carried out on varied GPU or GPU-based computational infrastructure.
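As a rough illustration of the hybrid role described above, the following sketch shows only the code generation half: a Python "controller" specializes OpenCL C source text at run time. The kernel name `scale`, the helper `generate_kernel`, and the parameters `dtype` and `factor` are hypothetical and chosen for illustration; they are not taken from the course material. Nothing here touches a GPU, so the sketch runs without PyOpenCL installed.

```python
from string import Template

# Hypothetical kernel template (illustrative names, not from the course).
# $dtype and $factor are filled in by Python before any compilation happens.
KERNEL_TEMPLATE = Template("""
__kernel void scale(__global const $dtype *x, __global $dtype *y)
{
    int i = get_global_id(0);
    y[i] = $factor * x[i];
}
""")


def generate_kernel(dtype: str, factor: int) -> str:
    """Return OpenCL C source specialized for one element type and constant."""
    return KERNEL_TEMPLATE.substitute(dtype=dtype, factor=int(factor))


# The high-level side decides the specialization at run time.
source = generate_kernel("float", 3)
print(source)
```

With PyOpenCL and an OpenCL device available, a string like `source` would typically be handed to `pyopencl.Program(context, source).build()`; that step is left out here so the example stays runnable on any machine.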

You will learn about each of these roles and how to best make use of what PyOpenCL and PyCUDA have to offer. In doing so, you will also gain familiarity with the OpenCL computation interface.

About the course

This course will build upon the knowledge you gain at PASI. In particular, we will use your familiarity with CUDA to learn about OpenCL, and your experience with Python and NumPy to make the connection to PyOpenCL and PyCUDA.

The class will take place in three sessions, with material partitioned roughly as follows:

- Lecture 1:

- Introduction to OpenCL based on CUDA knowledge;

- the OpenCL Universe: CL on CPUs and various GPUs;

- PyOpenCL: OpenCL Scripting Mechanics for GPU and multi-core.

- Lecture 2:

- Motivation for hybrid codes: GPUs and Scripting;

- GPU arrays, custom element-wise and reductive operations;

- run-time code generation: the How and Why;

- advanced code generation: templating;

- Heuristics and search patterns for automated tuning, performance measurement

- Lecture 3:

- PyOpenCL and mpi4py;

- a look at PyCUDA;

- PyCUDA and existing (C-based) CUDA libraries.

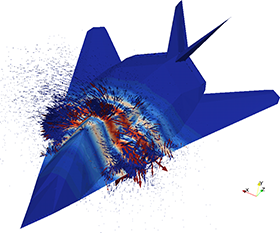

- An example: Gas dynamics and EM on complex geometries.

Depending on time and demand, a lab class in the use of PyOpenCL may be offered.