How Do We Know When We Know Something?

Before beginning today’s note, an acknowledgement, once again, of recent tragedy. On Tuesday, New York City suffered its deadliest terror attack since 9/11, when a man drove a truck into a pedestrian bike path, killing eight people and hospitalizing at least 11. Like recent attacks in Paris and Brussels, this incident appears to have been motivated by religious fanaticism and hate. The victims were from several countries, including Belgium and Argentina. This speaks to the international character of a city, my former home, that is proudly heterogeneous. Hopefully, this senseless act will catalyze a deeper appreciation of the cultural and religious pluralism embodied by New York, at a time when these values feel more vulnerable, and necessary, than ever.

Moving on, with sadness, to today’s note. Throughout my career, I have been struck by a phenomenon: Whenever I feel I have truly been immersed in a topic, when I feel like I know as much as there is to know about it, that is when I realize, inevitably, how much I do not know. This is, perhaps, akin to a broader observation about the generation of science. We are privileged to generate ideas for a living; one of the ways we pay for this privilege is by being keenly aware, perhaps more than most, of how little we actually know about many subjects. We are, for example, still studying the effect of marijuana legalization on public health. We do not know nearly enough about the population health effects of firearms, beyond the statistics of death and injury with which we have become so familiar. The list goes on, and is long.

But there are also areas where we do know we are correct, and certainly know enough to inform action, even if we need to learn more around the edges. We do know, for example, that climate change is real, we do know that vaccines are safe and effective, we do know that the theory of evolution is true. Yet in each of these cases, politics has pushed back against the facts, making it harder for us to act on our knowledge. This pushback often takes the form of partisan actors claiming that a given issue is still a matter of scientific debate, manufacturing doubt when the issue is, in fact, settled. This can be exasperating for those of us in the profession to hear, but it also raises a useful question: When does “the preponderance of science” tip the scales so we can definitively say “we know this”? The better we can answer this, the better we can catalyze data-inspired action, and show why politically motivated efforts to distort science are dishonest and counterproductive.

On achieving scientific consensus

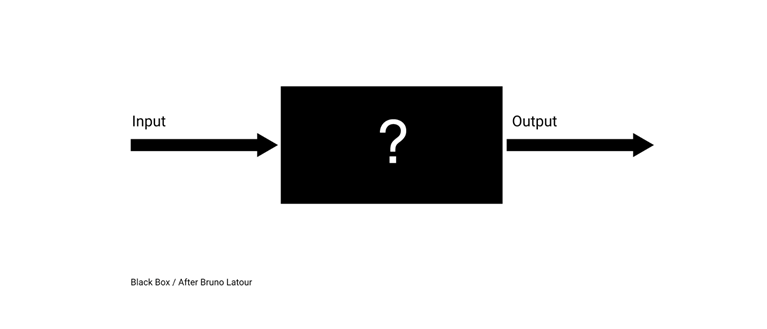

It seems to me that the starting question needs to be: How do we define “scientific consensus” and decide, based on this consensus, that something is “true”? In his classic book Science in Action: How to Follow Scientists and Engineers Through Society, the sociologist and philosopher Bruno Latour introduces the concept of “the black box” to represent a scientific conclusion that is widely accepted as true. The phrase is borrowed from cybernetics, where it serves as a kind of shorthand: whenever a machine or a set of commands is too complex to describe in full, a box may be drawn around it to indicate that all one needs to know is its input and output (Figure 1).

Figure 1 source: Revell T. “Haunted Machines: An Origin Story (Long).” Occasional blog of Tobias Revell. http://blog.tobiasrevell.com/2015/07/haunted-machines-origin-story-long.html. Accessed October 2, 2017.

The box can still be “opened” and the integrity of its contents examined, but marking it off in this way says that these components have already received ample scrutiny and been found to be sound. Latour relates this to science by using the example of the double helix. There was a time when no one knew for sure how DNA is structured (see, for example, the classic paper by Linus Pauling suggesting a triple helix). It was only after a rigorous process of scientific examination that the double helix shape came to be accepted and utilized as the basis for all further study of genetics. It is now a “black box.” While this does not mean that the double helix shape cannot be questioned, implicit in the scientific consensus is the understanding that such questions would be a retracing of steps, rather than a forging of new paths. In this sense, what was once just theory has become accepted fact, achieving what Trevor Pinch and Wiebe Bijker have called “closure.”

A more nuanced perspective, offered by Uri Shwed and Peter Bearman, suggests three trajectories for scientific consensus. The first is a “spiral” trajectory, whereby “substantive questions are answered and revisited at a higher level.” That is, we have reached consensus on the core issues and are now elaborating on nuance, with earlier, answered questions leading to new, unanswered ones. An example is our growing understanding of the link between income and health, one of the most widely accepted associations in our field. At its core, the relationship between income and health is shaped by factors like access to nutritious food, quality education, and health insurance. With the basics of this link well established, new research is moving our understanding of it to a higher level. We have begun to trace, for example, the correlation between widening income gaps and widening health gaps in the US, and to examine how poverty, in particular, drives this inequality.

The second is the “cyclical” trajectory, whereby “similar questions are revisited without stable closure.” This is the trajectory of the salt debate, where the question of salt’s effect on health is continually revisited, with closure not yet in reach. This poses a challenge: while consensus may indeed be reachable, it stays just beyond our grasp as we keep visiting and revisiting the same questions.

The third is a “flat” trajectory, whereby “there is no real scientific contestation.” That is, we know the answer, the question is closed, and argument may exist only to serve non-scientific ends. An example is the safety and effectiveness of vaccines, which are contested only by those employing bad science and roundly debunked arguments.

The challenges in getting to closure

Getting to “closure” is, therefore, a process of iterative generation of science, whereby scientists eventually, through refutation, argument, and agreement, decide what is in the “black box.” There are, as I see it, two impediments to this closure.

The first arises when entrenched groups dig in on opposing sides of an issue, polarizing the debate. This polarization is not to be confused with the disagreement and exchange of ideas that are the lifeblood of any worthwhile scientific endeavor. Such an exchange depends on people with different views testing what they think they know against the opposing views of others. Polarization occurs when this dialogue breaks down and researchers engage only with those who share their perspective; the result is the classic “cyclical trajectory” noted earlier. To illustrate, in researching the debate over salt’s effect on population health, we found that authors were 50 percent likelier to cite papers that shared their position on the subject. This creates a situation where consensus emerges not within science as a whole, but within two opposing camps, each with little interest in communicating with the other.

The second challenge arises when scientists achieve consensus, arriving at a “flat” trajectory, only to find it rendered effectively moot by sociopolitical factors. Pinch and Bijker touch on this when, writing about technological development, they stress the importance of the broader sociological context in assigning value to a given “artifact.” Even if research produces a device or a conclusion that is well constructed and meets what its makers consider an urgent societal need, these efforts will amount to little if society is uninterested in what science has to say. There are many examples of this in the historical record, most saliently the early struggle to establish tobacco as a cause of lung cancer. Perhaps the most pressing current example is climate change. Though the reality of climate change is overwhelmingly backed by scientific consensus, the refusal of certain lawmakers and their constituents to accept these data has stymied progress on this issue.

Moving beyond doubt to knowing and acting

Given these challenges, and the ever-present uncertainty surrounding all scientific inquiry, it is important for us to recognize that there are times when we do indeed know something, and to be able to communicate to the world why we are confident in this knowledge.

As with many other matters, the ancient Greeks anticipated this issue: in Plato’s Theaetetus, Socrates and Theaetetus examine the proposal that knowledge is “true belief with an account.” I have written before about how this “account” might be interpreted as the scientific method by which we test our theories and arrive, ultimately, at our conclusions. But “account” has another meaning as well: “a statement explaining one’s conduct.” For all the uncertainty that can sometimes characterize scientific inquiry, there are also occasions when we do know something, and when we also know how we know it. In an age when politics and culture exercise a kind of veto power over even our most carefully considered findings, there is value in knowing when we, collectively, are right. Understanding the nuances that get us to scientific consensus may help clarify our thinking to this end. Being able to recognize when scientific consensus points us toward a sound conclusion empowers us to act boldly on behalf of measures that we know will improve the health of populations.

I hope everyone has a terrific week. Until next week.

Warm regards,

Sandro

Sandro Galea, MD, DrPH

Dean and Robert A. Knox Professor

Boston University School of Public Health

Twitter: @sandrogalea

Acknowledgement: I am grateful to Eric DelGizzo for his contributions to this Dean’s Note.

Previous Dean’s Notes are archived at: https://www.bu.edu/sph/tag/deans-note/